Customer Review Analysis and Neural Network Modelling - Part 1 - EDA and processing

Amazon Review Classification

Introduction

This blog post was originally written in 2022 as a summary of an in-class ML project (check this link). Several articles shed light on the structure of the neural network I developed in this project, which gave me a basic idea of how to construct a neural network, as well as the modularized design behind the scenes.

This link contains the files of the project.

In this blog, natural language processing and neural networks are the two major parts that will be elaborated on, including several techniques and libraries that are not usually used when handling ordinary numerical data.

Task description

Customer reviews are important for a business to reflect on and improve its products/services. Due to the complex nature of human language, manually handling this unstructured data cannot meet current business requirements.

Sentiment analysis can be adopted to categorize and sort opinions as positive, neutral, or negative (the categories could be more nuanced). It helps to understand what customers like or dislike about the product/service.

In this project, machine learning methods are used to perform sentiment analysis and classification on a dataset of Amazon customer reviews of Kindle books.

Dataset link: Amazon review data (ucsd.edu)

We only use 9000 samples from the dataset, with sensitive information pre-processed, so only the review text (long text), the summary (short text), and the rating (target, numerical, 1 to 5) are used in the modelling.

GOAL: build machine learning models that can predict the rating based on the text data.

The metric used for evaluation is the F1-score (the weighted average of the per-class F1-scores, as this is a multi-class task).
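As a minimal sketch of how a support-weighted F1-score can be computed, here is a pure-Python version on a handful of invented ratings. The function name `weighted_f1` and the toy labels are assumptions for illustration only; in practice a library routine such as scikit-learn's `f1_score(..., average='weighted')` would normally be used.

```python
from collections import Counter

def weighted_f1(y_true, y_pred):
    """Average the per-class F1-scores, weighted by each class's support."""
    support = Counter(y_true)
    total = 0.0
    for label, n_true in support.items():
        # true positives: predicted this label and it was correct
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        n_pred = sum(1 for p in y_pred if p == label)
        precision = tp / n_pred if n_pred else 0.0
        recall = tp / n_true
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        total += f1 * n_true
    return total / len(y_true)

# toy ratings, invented for illustration
y_true = [5, 5, 4, 4, 3, 1]
y_pred = [5, 4, 4, 4, 3, 5]
score = weighted_f1(y_true, y_pred)
print(round(score, 3))  # 0.6
```

Classes with more samples contribute more to the final score, which is why this variant is a common choice for a slightly imbalanced rating distribution.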

Procedures

First of all, import the libraries, set the random seed and configuration, read the data, etc.

# basic EDA libs

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

import missingno as msn

import warnings

import random

import math

# setting

warnings.filterwarnings('ignore')

pd.set_option("display.max_columns", 20)

from sklearn.model_selection import train_test_split

import re

import torch

# reproducibility (global setting)

torch.manual_seed(12)

np.random.seed(12)

random.seed(12)

# NLP libs

import pkg_resources

from symspellpy import SymSpell, Verbosity

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

import string

import contractions

import html

import spacy

# run line below if the lib is never used before

# spacy.cli.download("en_core_web_sm")

spacyNLP = spacy.load("en_core_web_sm")

# read data

originData = pd.read_csv("train.csv")

# create a deep copy - make sure the original dataset is not changed.

dataset = originData.copy(deep = True)

I EDA

dataset.sample(3)

| | rating | reviewText | summary |
|---|---|---|---|
| 1306 | 4 | I really enjoyed this book because it ended on… | Surviving The Game |
| 4120 | 5 | This is a very dark and twisted tale of the da… | Very twisted |
| 4422 | 1 | Thank heavens this was an Amazon freebie when … | Failed resurrection. |

- rating - numerical, the target

- reviewText - longer text review, feature

- summary - shorter text review, feature

- check missing value

msn.bar(dataset, color='lightsteelblue')

INSIGHTS: no missing value

target - rating

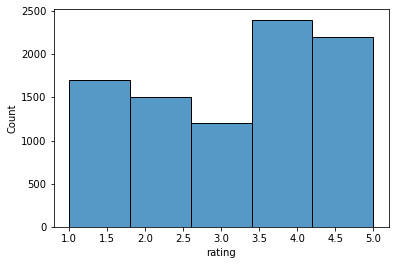

sns.histplot(data = dataset, x = 'rating', bins = 5)

INSIGHTS:

- This is a multi-class classification problem.

- The classes are ranked from 1 to 5, but the numbers do not imply a ratio.

- The dataset is slightly imbalanced: ratings 4 and 5 have more samples and rating 3 has fewer.

reviewText

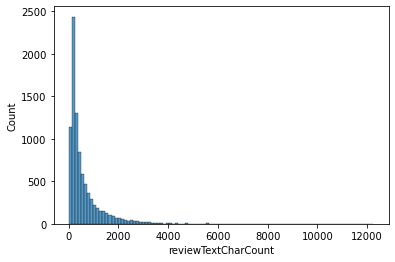

- A histogram of the character counts in reviewText

dataset['reviewTextCharCount'] = dataset['reviewText'].apply(len)

sns.histplot(dataset['reviewTextCharCount'], bins = 100)

INSIGHTS:

The graph shows that some reviews are very long while others contain only a few words. The distribution is clearly right-skewed.

summary

dataset['summaryCharCount'] = dataset['summary'].apply(len)

sns.histplot(dataset['summaryCharCount'], bins = 12)

The image shows that the summary is much shorter, but it is also right-skewed.

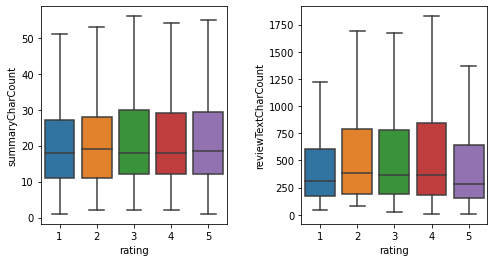

Relation between the target and a feature

The most accessible numerical feature is the length of the review, so we plot it against the rating to see if there is any difference.

fig, (ax1, ax2) = plt.subplots(1,2,figsize = (8,4))

sns.boxplot(x = 'rating',y='summaryCharCount', data = dataset, showfliers = False, ax= ax1)

sns.boxplot(x = 'rating',y='reviewTextCharCount', data = dataset, showfliers = False, ax = ax2)

plt.subplots_adjust(wspace=0.4)

plt.show()

INSIGHTS:

As shown in the boxplots (outliers excluded), there is little difference in the length of reviews and summaries across ratings.

Review text tends to be shorter for ratings 1 and 5 and longer for the other ratings, though the difference is not obvious.

Relation between features

See if there is any word overlap between the two features, and plot it against the target variable.

# define the overlap similarity score

# we use the overlap coefficient instead of other measures

# because the two columns differ greatly in length

def overlapSim(str1, str2):

    a = set(str1.lower().split())

    b = set(str2.lower().split())

    # guard against empty strings to avoid division by zero

    if not a or not b:

        return 0.0

    c = a.intersection(b)

    return float(len(c)) / min(len(a), len(b))

overlapScoreResult = []

for i, row in dataset.iterrows():

    s1 = row.reviewText

    s2 = row.summary

    overlapScore = overlapSim(s1, s2)

    overlapScoreResult.append(overlapScore)

dataset.insert(loc = 4,

               column = 'overlapScore',

               value = overlapScoreResult)

#plotting the overlapscore

sns.histplot(dataset['overlapScore'],bins = 10)

For a large proportion of reviews, the summary shares no words with the review text.

However, this does not necessarily mean they are not semantically similar; more advanced techniques (a trained model, vectorized representations, or comparison after normalization) can be used to analyse semantic similarity.

The reason for analysing similarity here is that the summary may not convey the attitude accurately, and it may be elaborated on in the longer reviewText.
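To illustrate why the overlap coefficient suits a short summary paired with a long review, here is a small sketch comparing it with the Jaccard index on invented strings. The `jaccard` helper and the example sentences are assumptions added for comparison and are not part of the project code.

```python
def overlap(a: set, b: set) -> float:
    """Overlap coefficient: shared words divided by the size of the smaller set."""
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))

def jaccard(a: set, b: set) -> float:
    """Jaccard index: shared words divided by the size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

# invented example: every summary word also appears in the review
summary = set("great dark story".split())
review = set("a great story with a dark twist that kept me reading all night".split())

print(overlap(summary, review))  # 1.0
print(jaccard(summary, review))  # 0.25
```

The Jaccard score is dragged down simply because the review is long, while the overlap coefficient only asks how much of the shorter text is covered.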

II Data Pre-processing

Some of the deeper EDA is better done after pre-processing, because dirty data would affect the accuracy of the analysis.

Text needs to be transformed into a numerical representation in order to be fed to the model. Because text-to-number conversion is a rather complicated task for a computer, cleaning the data is vital to mitigate information loss/variation.

Here is a typical example:

- 'good', 'GOOD', 'Goooood', 'best', 'great', 'graet', 'good!' should mean roughly the same thing, with only slight differences in form, punctuation, upper/lower case, typos, etc. But the model may treat them as different words if only simple methods are used to transform them.

There are also many methods to transform the texts into numbers:

- Bag of words

- TF-IDF

- Advanced embedding method (vectorization)

NOTE that some methods may cause data leakage, namely methods that use information from the test/validation set. We should restrict these methods to be fitted on the training set only.

html entities

There are HTML entities in the text, which need to be converted into their readable form.

example: &#39; -> '

The Python standard library module html can deal with such entities.

def htmlEntityTran(DF, columns):

    for acol in columns:

        DF[acol] = DF[acol].apply(lambda x: html.unescape(x))
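As a quick illustration of what `html.unescape` does to a single string, here is a small sketch. The sample sentence is invented; only the standard library is used.

```python
import html

# invented review snippet containing numeric and named HTML entities
raw = "It&#39;s a &quot;must read&quot; &amp; I couldn&#39;t stop"
clean = html.unescape(raw)
print(clean)  # It's a "must read" & I couldn't stop
```

Running this step before contraction expansion matters, because a contraction such as "couldn&#39;t" is only recognisable once the apostrophe has been restored.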

contraction expansion

Contractions should be expanded to their full form:

example: I'm -> I am

def contractionExpansion(DF, columns):

    for acol in columns:

        DF[acol] = DF[acol].apply(lambda x: contractions.fix(x))

punctuation processing

There are a lot of emoticons and misused punctuation marks in the text, because the book reviews come from an online source without careful checking.

In the process below, we define a (non-comprehensive) list of emoticons that can be identified and replace each of them with its meaning.

The emoticon lists were copied from a repository on GitHub.

def punctuationProcess(DF, columns):

    for acol in columns:

        DF[acol] = DF[acol].apply(lambda x: puncFix(x))

# .split() without an argument drops empty strings; an empty string in these lists

# would make str.replace insert the replacement between every character

smileEmo = r""":-) :) :-] :] :-> :> 8-) 8) :-} :} :o) :c) :^) =] =) ^_^ ^^ :') :3 :-3 =3 x3 X3 (: (-: ))""".split()

laughEmo = r""":-D :D 8-D 8D =D =3 B^D c: x-D xD X-D XD C:]""".split()

winkEmo = r""";-) ;) *-) *) ;-] ;] ;^) ;> :-, ;D ;3""".split()

sadEmo = r""":-( :( :-c :c :-< :< :-[ :[ :-|| >:[ :{ :@ :( ;( :'-( :'( :=( :$ ): """.split()

skepEmo =r"""

:-/

:/

:-.

>:\

>:/

=/

=\

:L

=L

:S

:-|

:|

-_-

""".split()

stunEmo = r""":-O :O :-o :o :-0 8‑0 >:O =O =o =0 O_O o_o O-O o-o O_o o_O""".split(" ")

def puncFix(row):

    for emoticon in smileEmo:

        row = row.replace(emoticon, 'smileface')

    for emoticon in laughEmo:

        row = row.replace(emoticon, 'laughface')

    for emoticon in winkEmo:

        row = row.replace(emoticon, 'winkface')

    for emoticon in sadEmo:

        row = row.replace(emoticon, 'sadface')

    for emoticon in skepEmo:

        row = row.replace(emoticon, 'skepticalface')

    for emoticon in stunEmo:

        row = row.replace(emoticon, 'stunnedface')

    # ↑ emoticons processed

    # ↓ special punctuations processed

    row = row.replace('$$','ss')

    row = row.replace('$','money')

    row = row.replace('w/','with')

    # the 2 steps below add spaces between punctuation and letters.

    row = re.sub(r"""(?<=[,.!'";:()*?/-])(?=[a-zA-Z])""", ' ', row)

    row = re.sub(r"""(?<=[a-zA-Z])(?=[,.!'";:()*?/-])""", ' ', row)

    # render repeated punctuation consistently: 2+ dots => '...', 2+ hyphens => '--'

    row = re.sub(r"\.{2,}", '...', row)

    row = re.sub(r"-{2,}", '--', row)

    # adding spaces before and after **

    row = row.replace('**', ' ** ')

    return row

This process could be improved by looking more carefully into the data samples to find further potential improvements.

A tool for checking the special punctuation is provided later in this section.
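To make the effect of the lookaround substitutions concrete, here is a minimal, self-contained sketch applied to an invented sentence. The helper name `space_out_punctuation` is hypothetical; the patterns mirror the ones used in puncFix, with the dot rule written as `\.{2,}`.

```python
import re

# the punctuation characters handled by the spacing rules
PUNCT = r"""[,.!'";:()*?/-]"""

def space_out_punctuation(text: str) -> str:
    # insert a space where punctuation is directly followed by a letter
    text = re.sub(rf"(?<={PUNCT})(?=[a-zA-Z])", " ", text)
    # insert a space where a letter is directly followed by punctuation
    text = re.sub(rf"(?<=[a-zA-Z])(?={PUNCT})", " ", text)
    # collapse runs of two or more dots into a single '...'
    text = re.sub(r"\.{2,}", "...", text)
    return text

sample = "Loved it!Would read again....really"
result = space_out_punctuation(sample)
print(result)  # Loved it ! Would read again ... really
```

Separating punctuation from words this way means a later tokenizer sees '!' and '...' as their own tokens, which is what allows them to be kept or dropped selectively.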

lower case

This process turns all upper-case letters into lower case.

This process could be improved; there are several concerns:

- upper case used to intensify the emotion expressed

- upper case used for abbreviations

If we simply transform all the characters into lower case, some important information could be lost.

In this project, we still define the function, but apply it in a certain order (after other steps such as counting the upper-case letters) to avoid information loss.

# lower case

# A => a

def lowercaseCountTranformer(DF, columns):

    """

    This function transforms all the characters into lower case,

    and stores the number of upper-case characters

    """

    for acol in columns:

        DF[acol+'UpperCount'] = DF[acol].apply(lambda x: sum(1 for c in x if c.isupper() ) )

        DF[acol] = DF[acol].apply(lambda x: x.lower())
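The order of the two steps is the important part: the count has to be taken before the text is lower-cased. A small sketch on a single invented string (the helper name `upper_count_then_lower` is hypothetical):

```python
def upper_count_then_lower(text: str):
    """Return the lower-cased text together with the number of upper-case characters."""
    n_upper = sum(1 for c in text if c.isupper())  # count first...
    return text.lower(), n_upper                   # ...then lower-case

text = "I LOVED this book, BEST of 2014!"
lowered, n_upper = upper_count_then_lower(text)
ratio = n_upper / len(text)  # share of capital letters, usable as a numeric feature

print(lowered)  # i loved this book, best of 2014!
print(n_upper)  # 10
```

If the two lines were swapped, the count would always be zero and the emphasis signal carried by capital letters would be lost.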

Lemmatization and Stemming

Stemming and lemmatization (stanford.edu): this website introduces the basic ideas of lemmatization and stemming.

In short, lemmatization transforms a word into its base form precisely, considering spelling, part of speech, comparative form, etc. It needs more computation but generally gives better results. Stemming is relatively simple: it chops off the ends of words to collapse derivationally related words.

EXAMPLE:

saw – lemma –> see – little information loss

saw –stem–> s – large information loss

There are more considerations in choosing between these two methods to clean the data, and the choice is task-specific.

In this project, due to the small sample size, we choose lemmatization, as provided by spaCy (the StanfordNLP library was too slow when I tried it).

spaCy · Industrial-strength Natural Language Processing in Python
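The difference between the two approaches can be seen in a deliberately crude toy, sketched below. Both `crude_stem` and the `LEMMAS` lookup table are invented stand-ins: real stemmers and lemmatizers (including spaCy's) are far more elaborate and also use part of speech, which this toy ignores.

```python
def crude_stem(word: str) -> str:
    """Toy stemmer: strip the first matching suffix, with no knowledge of the word."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

# hypothetical lookup table standing in for a real lemmatizer's vocabulary
LEMMAS = {"saw": "see", "better": "good", "stories": "story", "loved": "love"}

def toy_lemma(word: str) -> str:
    """Toy lemmatizer: look the word up, fall back to the word itself."""
    return LEMMAS.get(word, word)

words = ["saw", "better", "stories", "loved"]
stems = [crude_stem(w) for w in words]
lemmas = [toy_lemma(w) for w in words]

print(stems)   # ['saw', 'better', 'stori', 'lov']
print(lemmas)  # ['see', 'good', 'story', 'love']
```

The stemmer produces non-words and misses irregular forms, while the lemmatizer maps each word to a dictionary form at the cost of needing linguistic knowledge.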

# lemmatization and stemming

# turn the word back to its original form

# tokenization

# turning a sentence into a list of words

def LemmatizationTransform(DF, columns, mode = "Lemma"):

    """

    spaCy is used, but only its tokenization and lemmatization pipeline components.

    NLTK (and StanfordNLP) have similar functions but lack the precision and speed.

    Tokenization is done in the same step by spaCy.

    """

    for acol in columns:

        if mode == "Lemma":

            DF[acol+'tokenized'] = DF[acol].apply(lambda row: [token.lemma_ for token in spacyNLP(row)])

        else:

            DF[acol+'tokenized'] = DF[acol].apply(lambda row: [token.text for token in spacyNLP(row)])

Stop word removal

Stop word removal is also task-specific. The goal of this project is to predict sentiment, which has negative and positive directions. Some stop words indicate a turning point in meaning that could affect the sentiment, so we customize the stop word list to fit the purpose of the project.

# check the stopword removal wordlist

print(stopwords.words('english'))

origin = set(stopwords.words('english'))

wanted = {'what','but','against','down','up','on','off','over','under','out','same',

'again','further','why','what','how', 'all', 'any','with',

'few', 'more', 'most', 'other','no', 'nor', 'not', 'only',

'than', 'too', 'very', 'just', 'should', 'ain',

'aren', "aren't", 'couldn', "couldn't", 'didn', "didn't",

'doesn', "doesn't", 'hadn', "hadn't", 'hasn', "hasn't", 'haven',

"haven't", 'isn', "isn't", 'ma', 'mightn', "mightn't", 'mustn',

"mustn't", 'needn', "needn't", 'shan', "shan't", 'shouldn', "shouldn't",

'wasn', "wasn't", 'weren', "weren't", 'won', "won't", 'wouldn', "wouldn't"}

unwanted = {'the','I',"'s"}

StopWordCustom_Deep = list(origin - wanted | unwanted)

StopWordCustom_Shallow = ["it's", 'their', 're', 'she', 'ours',

'it', 've', 'you', 'y', 'o', 'themselves',

'your', 'yours', 'm', "you'd",

"you're", 'and', 'its', "you've", 'that', 'ourselves',

'himself', 'this', 'been', "you'll", 'an', 'my', 'me',

'myself', 'a', 'these', 'which',

'he', 'his', 'I', 'them', 'the', "'s", 'yourselves',

'our', 's', 'yourself', 'theirs', 'herself', 'they', "she's",

'hers', 'we', 'those', 'him', "that'll", 'i', 'her', 'itself']

# stopword removal function

def stopWordRemove(DF, columns, deep = True):

    for acol in columns:

        if deep:

            DF[acol] = DF[acol].apply(lambda alist: [item for item in alist if item not in StopWordCustom_Deep])

        else:

            DF[acol] = DF[acol].apply(lambda alist: [item for item in alist if item not in StopWordCustom_Shallow])
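A tiny example shows why the stop word list is customized for sentiment. The word sets below are small invented subsets, not the NLTK list used in the project.

```python
# small invented subset of a generic stop word list
default_stop = {"this", "is", "not", "a", "very", "but", "the"}
# words that can flip or intensify sentiment, so we want to keep them
keep = {"not", "but", "very"}
custom_stop = default_stop - keep

tokens = "this is not a very good book".split()
generic = [t for t in tokens if t not in default_stop]
custom = [t for t in tokens if t not in custom_stop]

print(generic)  # ['good', 'book']
print(custom)   # ['not', 'very', 'good', 'book']
```

With the generic list the negative review collapses to "good book", whereas the customized list preserves the negation.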

Punctuation removal

‘…’, ‘!’ and ‘?’ carry some information about the sentiment; other punctuation marks are relatively less informative.

# punctuation

# '...' and '!' and '?' would contain some information about the sentiment

punctList = set(string.punctuation)

puncwanted = {'!', '?'}

puncunwanted = {' '}

punctList = punctList - puncwanted | puncunwanted

def punctRemover(DF, columns):

    for acol in columns:

        DF[acol] = DF[acol].apply(lambda alist: [item for item in alist if item not in punctList])

Spell mistakes fix

We noticed several misspellings in the dataset that affect data quality. The SymSpell library (used here through the symspellpy package) fixes the spelling mistakes. The algorithm is based on edit distance, with a special search method to accelerate the process.

The algorithm: 1000x Faster Spelling Correction algorithm SeekStorm

# the library also works on punctuation, which we do not want to happen.

# Therefore, we create an exception list; tokens in the list are left unchanged

punctuationException = {'?','!','...'}

others = {'eh'}

symspellException = punctuationException | others

sym_spell = SymSpell(max_dictionary_edit_distance=2, prefix_length=7)

dictionary_path = pkg_resources.resource_filename(

"symspellpy", "frequency_dictionary_en_82_765.txt"

)

# term_index is the column of the term and count_index is the

# column of the term frequency

sym_spell.load_dictionary(dictionary_path, term_index=0, count_index=1)

def spellcheck(tokens):

    checkedtokens = []

    for token in tokens:

        if (token not in symspellException) and (not token.isnumeric()):

            try:

                checkedtokens.append(sym_spell.lookup(token, Verbosity.CLOSEST, max_edit_distance=2)[0].term)

            except IndexError:

                # no suggestion within the edit distance: keep the token as-is

                checkedtokens.append(token)

        else:

            checkedtokens.append(token)

    return checkedtokens

def spellCheckReplace(DF, columns):

    for acol in columns:

        DF[acol] = DF[acol].apply(spellcheck)
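To show the edit-distance idea behind this kind of correction, here is a brute-force sketch using plain Levenshtein distance and a tiny invented frequency dictionary. This is not how SymSpell searches internally (its speed comes from a much smarter lookup); the names `levenshtein`, `closest` and `FREQ` are assumptions made for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution / match
        prev = curr
    return prev[-1]

# invented word frequencies, used only to break ties between equally close words
FREQ = {"great": 1000, "grate": 10, "book": 800}

def closest(word: str, max_dist: int = 2) -> str:
    """Pick the dictionary word with the smallest distance, preferring frequent words."""
    dist, _, term = min((levenshtein(word, t), -count, t) for t, count in FREQ.items())
    return term if dist <= max_dist else word

print(closest("graet"))   # great
print(closest("zzzzzz"))  # zzzzzz
```

Both "great" and "grate" are two edits away from "graet" here, so the frequency decides; words with no candidate within the threshold are returned unchanged.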

pre-process pipeline

We define all the cleaning steps as functions instead of applying them directly, so that we can build different levels of pre-processing to fit particular model structures.

EXAMPLE:

- LSTM and CNN do consider the context of a word, so some stop words are kept.

- If BoW or TF-IDF is used for text representation, the order of the words is not considered, so the pre-processing can be deep, removing all the noisy text.
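The idea of assembling different pre-processing levels from the same building blocks can be sketched as lists of functions. The step functions and the tiny stop word set below are simplified, hypothetical stand-ins for the project's DataFrame-based functions.

```python
import html

# simplified single-string versions of the cleaning steps (illustrative only)
def unescape(text):
    return html.unescape(text)

def lower(text):
    return text.lower()

def tokenize(text):
    return text.split()

def drop_stop(tokens):
    return [t for t in tokens if t not in {"the", "a", "i"}]

DEEP = [unescape, lower, tokenize, drop_stop]  # e.g. for BoW / TF-IDF
SHALLOW = [unescape, lower, tokenize]          # e.g. for sequence models

def run(steps, text):
    """Apply each step in order, feeding the output of one into the next."""
    for step in steps:
        text = step(text)
    return text

sample = "I loved the &quot;twist&quot;"
deep_out = run(DEEP, sample)
shallow_out = run(SHALLOW, sample)
print(deep_out)     # ['loved', '"twist"']
print(shallow_out)  # ['i', 'loved', 'the', '"twist"']
```

Keeping each step as a separate function makes it cheap to add, remove or reorder steps when a different model needs a different level of cleaning.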

# Workflow of deep preprocess

def DeepPreprocess(df):

    htmlEntityTran(df,['reviewText','summary']) # &quot; => "

    contractionExpansion(df,['reviewText','summary']) # I'm => I am

    punctuationProcess(df,['reviewText','summary']) # add space before puncs; emoticon; normalize

    lowercaseCountTranformer(df, ['reviewText','summary']) # A => a ; add count column

    LemmatizationTransform(df,['reviewText','summary']) # smiled => smile; tokenized

    stopWordRemove(df,['reviewTexttokenized','summarytokenized']) # delete "I"

    punctRemover(df,['reviewTexttokenized','summarytokenized']) # delete meaningless punctuation

    spellCheckReplace(df,['reviewTexttokenized','summarytokenized']) # graet => great

DeepPreprocess(dataset)

#subData = pd.read_csv('test.csv')

#DeepPreprocess(subData)

#subData.to_csv('preprocessedtest_deep.csv')

#dataset.to_csv('preprocessedtrain_deep.csv')

# shallow preprocessing (for neural networks that can learn grammar)

def ShallowPreprocess(df):

    htmlEntityTran(df,['reviewText','summary']) # &quot; => "

    contractionExpansion(df,['reviewText','summary']) # I'm => I am

    punctuationProcess(df,['reviewText','summary']) # add space before puncs; emoticon; normalize

    lowercaseCountTranformer(df, ['reviewText','summary']) # A => a ; add count column

    LemmatizationTransform(df,['reviewText','summary'], mode = "shallow") # only tokenized

    stopWordRemove(df,['reviewTexttokenized','summarytokenized'], deep = False) # delete "I"

    punctRemover(df,['reviewTexttokenized','summarytokenized']) # delete meaningless punctuation

# run this to do shallow preprocess

# keep the prepositions

# avoid using misspelling correction -

# it may correct wrongly and convert useful information to something else

#subData = pd.read_csv('test.csv')

#ShallowPreprocess(dataset)

#ShallowPreprocess(subData)

# save the pre-processed data; subData only exists once the commented

# lines that load and pre-process 'test.csv' have been run

#subData.to_csv('preprocessedtest_deep.csv')

dataset.to_csv('preprocessedtrain_deep.csv')

data quality checker

This is used for comparing the original text with the modified/cleaned text.

Keep iterating on the process to improve it.

a = random.randint(0, len(dataset) - 1)  # randint is inclusive on both ends

#a = 8983

print(a)

print(dataset['reviewTexttokenized'].iloc[a])

print("==============================")

print(dataset['summarytokenized'].iloc[a])

print("==============================")

print(dataset['reviewText'].iloc[a])

print("==============================")

print(dataset['summary'].iloc[a])

print("******************************")

print(originData['reviewText'].iloc[a])

print("==============================")

print(originData['summary'].iloc[a])

- counting the length of different features

from collections import Counter

counter = Counter()

MaxLengthReview = 0

MaxLengthSummary = 0

for row in dataset['reviewTexttokenized']:

    counter.update(row)

    if len(row) > MaxLengthReview:

        MaxLengthReview = len(row)

for row in dataset['summarytokenized']:

    counter.update(row)

    if len(row) > MaxLengthSummary:

        MaxLengthSummary = len(row)

print('MaxLengthReview', MaxLengthReview)

print('MaxLengthSummary',MaxLengthSummary)

- Check the punctuation to seek for improvement.

for akey in sorted(counter.keys()):

    # print every token that contains at least one punctuation character

    if any(char in string.punctuation for char in akey):

        # if counter[akey]>=2:

        print(akey, '|===>', counter[akey])

After the data is pre-processed, a cleansed dataset is generated and stored in .csv format, and the modelling is done in separate files.

III Before Modelling

Loading the data, etc.

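import ast

#Text is pre-processed

# ast.literal_eval safely parses the stored token lists back into Python lists

dataset = pd.read_csv('preprocessedtrain_deep.csv',

                      index_col = 0,

                      converters = {'reviewTexttokenized': ast.literal_eval,

                                    'summarytokenized': ast.literal_eval}

                      )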

# add 2 more features - the proportion of capital letters

dataset['reviewCapitalPer'] = dataset['reviewTextUpperCount'] / dataset['reviewTextCharCount']

dataset['summaryCapitalPer'] = dataset['summaryUpperCount'] / dataset['summaryCharCount']

Text Data Representation

Very common methods to represent text data include BoW (Bag of Words) and TF-iDF (Term Frequency-Inverse Document Frequency).

TF-iDF weights each word by how informative it is, while BoW only counts occurrences. TF-iDF usually works better in practice.

- Data leakage problem

TF-iDF should be computed after splitting the train/valid/test data, otherwise there is a risk of data leakage.

Why?

\[TF(term\ frequency) = \frac {count\ of\ term\ in\ document }{number\ of\ words\ in\ document}\]

\[iDF(inverse\ Document\ Frequency) = log( \frac{number\ of\ documents}{number\ of\ documents\ that\ have\ the\ term})\]

The iDF term is computed from document counts over the whole corpus. If the test/valid data is not split off in advance, the TF-iDF calculation would therefore contain information from the unseen data.

To prevent data leakage, we use a pipeline to make sure the TF-iDF calculation happens after splitting.
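A minimal pure-Python sketch of the leakage-free order, following the two formulas above: the iDF weights are fitted on the training documents only and then reused for unseen documents. The function names, the toy documents and the choice to ignore terms never seen in training are assumptions for illustration; a library vectorizer inside a pipeline plays this role in practice.

```python
import math
from collections import Counter

def fit_idf(train_docs):
    """Compute iDF = log(N / document frequency) from the training documents only."""
    n_docs = len(train_docs)
    doc_freq = Counter(term for doc in train_docs for term in set(doc))
    return {term: math.log(n_docs / df) for term, df in doc_freq.items()}

def tfidf(doc, idf):
    """TF-iDF of one tokenized document; terms unseen in training are ignored."""
    counts = Counter(doc)
    return {term: (cnt / len(doc)) * idf[term]
            for term, cnt in counts.items() if term in idf}

# invented tokenized documents
train = [["great", "book"], ["bad", "book"], ["great", "great", "story"]]
test = [["great", "ending"]]

idf = fit_idf(train)       # fitted on train only
vec = tfidf(test[0], idf)  # test data is only transformed, never used for fitting
print(vec)
```

Because `fit_idf` never sees the test documents, the word "ending" has no weight at all, which is exactly the behaviour that keeps test information out of the features.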

Stratified Split

An imbalanced dataset poses a challenge for the model because most algorithms assume that each class has an equal number of examples.

A Gentle Introduction to Imbalanced Classification (machinelearningmastery.com)

In this project, the imbalance is not severe, but for the accuracy of the model we use a stratified split, making sure that the proportion of each class is the same in the train/valid/test datasets.
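What a stratified split does can be sketched in a few lines of pure Python: the indices of each class are shuffled and split separately, so every class keeps roughly the same share in both parts. The function `stratified_split` and the toy labels are hypothetical; with scikit-learn the same effect is obtained by passing `stratify=` to `train_test_split`.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, test_ratio=0.2, seed=12):
    """Return (train_idx, test_idx), splitting each class separately."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_ratio)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx

# invented, imbalanced ratings
labels = [5] * 50 + [4] * 30 + [3] * 10 + [1] * 10
train_idx, test_idx = stratified_split(labels)
test_counts = Counter(labels[i] for i in test_idx)
print(test_counts)
```

Each class contributes 20% of its samples to the test part, so the rare ratings are not accidentally left out of either split.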